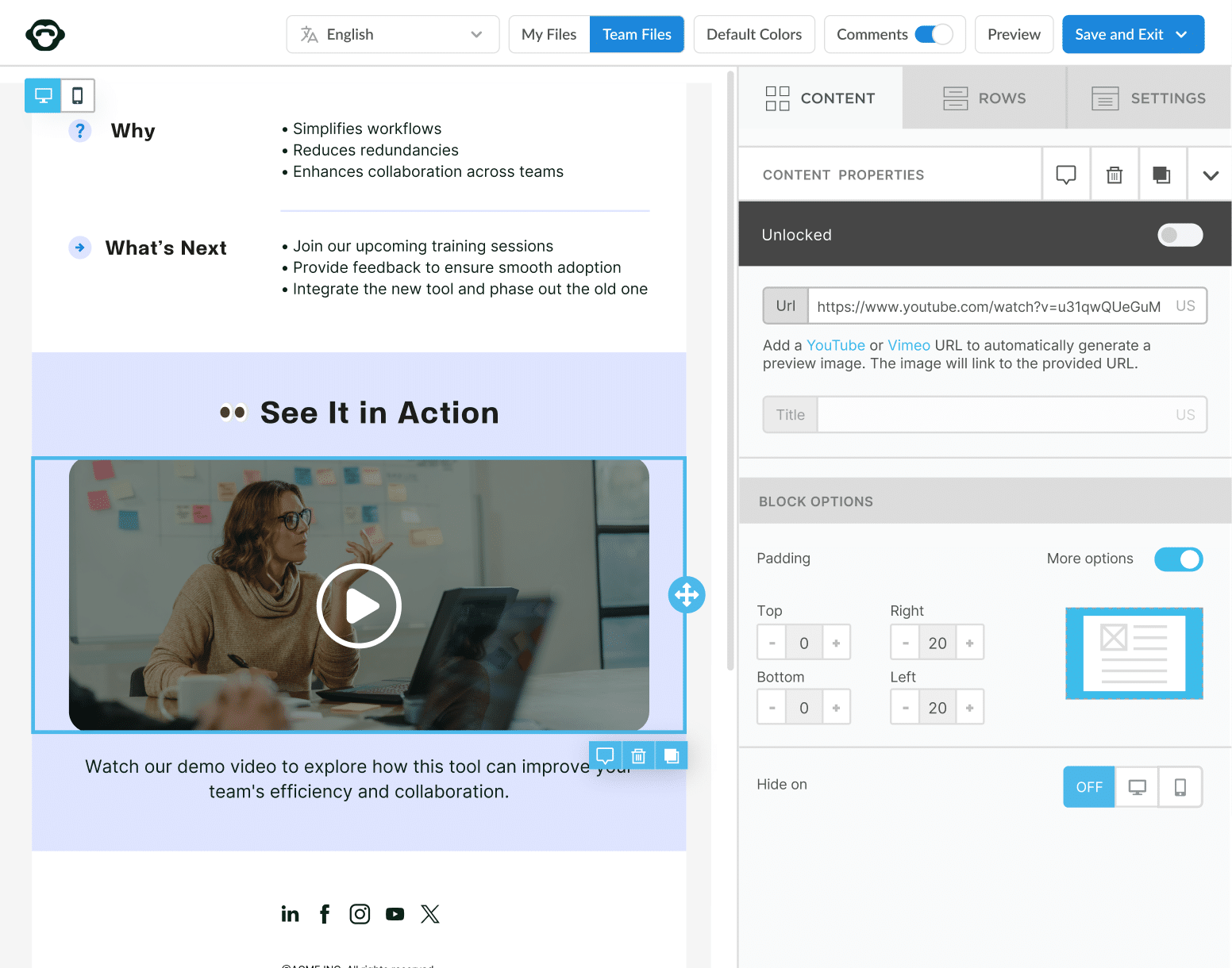

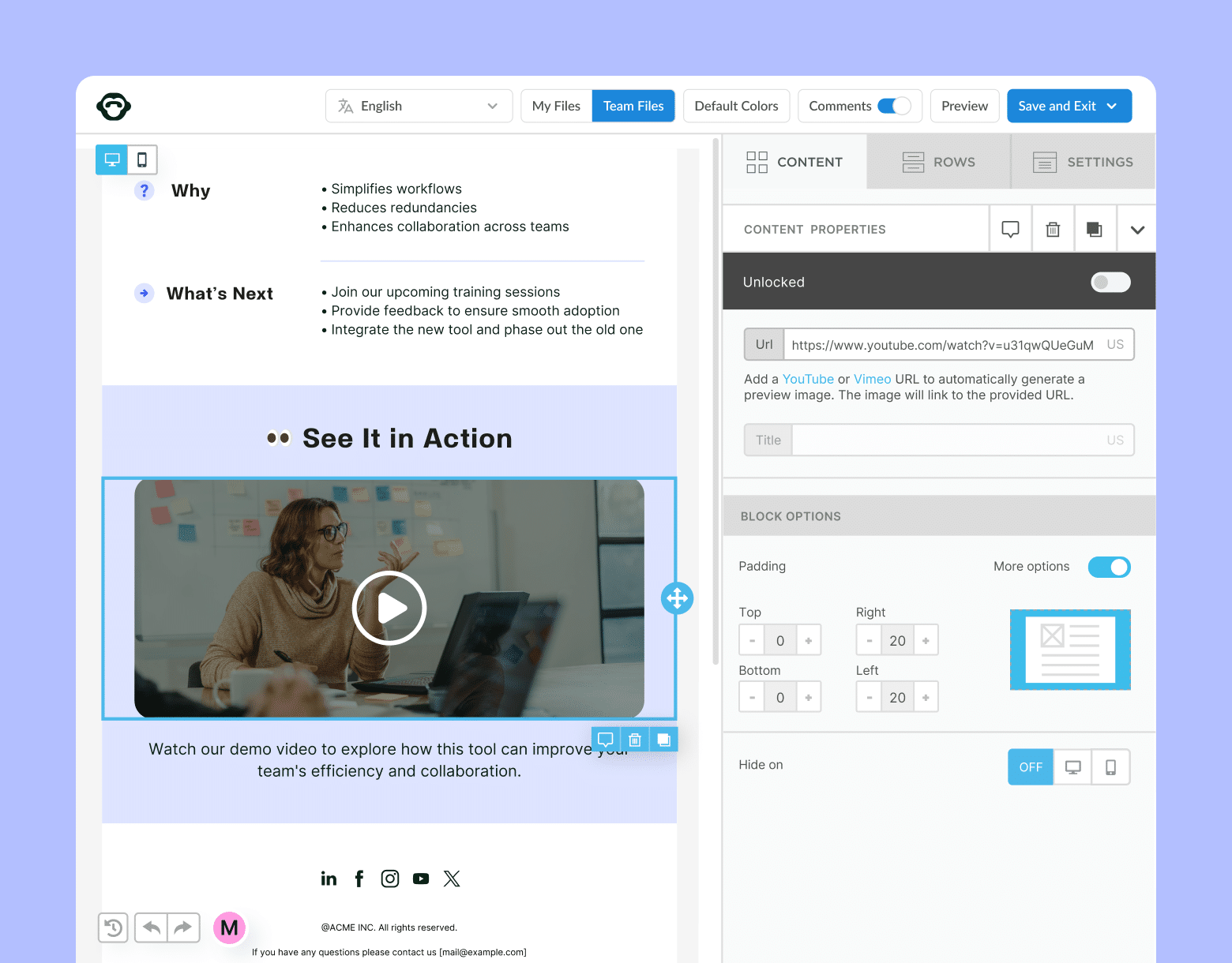

Will AI replace human communicators, or is that a myth?

The honest answer is nuanced. Parts of our work will absolutely be replaced. Entire roles will not. Communications jobs aren’t a single function. They’re dozens of tasks bundled together under one title. What AI is really doing is forcing an uncomfortable reckoning: a lot of what passed for “strategic communications work” was actually routine execution in disguise.

When AI can produce a passable press release in seconds, it becomes harder to pretend that drafting alone is the value. That pressure isn't a threat; it's a clarification. It forces us to get honest about where human expertise actually matters.

AI is excellent at the work many communicators find tedious: first drafts, data analysis, catching basic errors, monitoring at scale, and repurposing content. What it cannot do is understand context, navigate organizational politics, or make nuanced judgment calls. It doesn’t read a room. It doesn’t understand history, power dynamics, or trust. And it doesn’t carry accountability.

The real value of communications lives in the messy, human parts of the job: sensing when something will land badly, knowing when to deviate from the script, building trust across stakeholder groups, and making ethical decisions when the efficient option isn’t the right one. AI can help you write faster. It cannot decide what’s worth saying, or when silence is the better choice.

There is a generational divide here. Many younger professionals are optimistic about AI’s potential. More experienced communicators tend to emphasize the irreplaceable human elements. Both perspectives are valid. The future belongs to people who can hold both truths at once and evolve their expertise beyond what machines can replicate.

What AI Can Realistically Do Today and Where It Breaks Down

AI is genuinely useful in several areas. It can draft content quickly, summarize large volumes of information, brainstorm ideas, repurpose content across formats, catch surface-level editing errors, and personalize messages at scale. For many communicators, it feels like having a tireless research assistant at your elbow.

But there are real limitations that cannot be ignored.

AI still hallucinates, sometimes at alarming rates. Even newer models designed to “reason” through problems can fabricate information, and in some cases do so more frequently than earlier versions. This means everything AI produces must be rigorously fact-checked. Many knowledge workers report spending significant time each week verifying AI outputs, and some users have acknowledged making business decisions based on incorrect AI-generated information.

AI does not understand truth. It predicts plausible language. That distinction matters.

There are also things AI fundamentally cannot do. It does not understand your organization’s culture or institutional memory. It doesn’t know why a particular phrase will upset a school board or why a CEO reacts strongly to certain language choices. It cannot read tone, interpret silence, or take responsibility when something goes wrong. When a crisis hits, there is no AI sitting in the room owning the outcome.

The technology is advancing quickly. Human judgment still has to stay at the center.

Partner or Competitor?

The idea that “AI is your competitor” is largely a distraction. AI is not trying to build a career, impress leadership, or outperform colleagues. It has no ambition and no agenda. It is a tool—an extremely capable one—but still a tool.

The real competition has always been, and will continue to be, between humans who learn to work effectively with these tools and those who don’t.

When people understand how AI works and have even basic exposure to it, fear tends to drop and curiosity tends to rise. For communicators, this shift matters. AI can take on the work that drains time and energy, allowing humans to focus on judgment, strategy, and relationships.

A useful mental model is this: AI is a highly capable assistant that never gets tired and processes information at inhuman speed—but has no lived experience and cannot read a room. You would never hand that assistant full control of crisis communications. But it would be equally shortsighted not to use it to reduce unnecessary workload.

The question isn’t whether AI is a partner or a competitor. It’s whether you’re becoming the kind of communicator who knows how to work with it—or the kind who gets overtaken by people who do.

Is AI Adoption Really Happening as Fast as the Hype?

The answer is both yes and no.

Communicators are experimenting heavily. Many professionals already use personal AI tools for work, and organizational AI adoption has risen sharply in a short time. In practice, AI is already inside most organizations, whether leadership planned for it or not.

What’s moving much more slowly is thoughtful, embedded adoption. Governance, training, workflow integration, and shared guardrails lag behind experimentation. Many leaders say they are investing in AI, but far fewer feel confident that those investments are translating into real progress.

There is also a gap between perception and reality. Employees are often using AI more than leadership realizes, while many communications professionals report receiving little to no formal AI training. As a result, people are improvising by using tools they trust, learning on their own, and trying to stay productive without clear guidance.

So yes, AI is being adopted quickly, but adoption remains chaotic and uneven. It is not yet being adopted well. That gap represents a major opportunity for communications teams. Real integration looks less flashy than headlines suggest. It looks like clear policies, simple workflows, consistent training, and realistic expectations. It prioritizes trust over speed.

Which Communications Tasks Are Most Likely to Be Automated?

Much of what consumes time in communications isn't strategic; it's mechanical. High-volume, repetitive, rules-based work is exactly where AI performs best.

Tasks that are already being automated, or are well on the way, include:

- First drafts of press releases, internal announcements, social posts, newsletters, and talking points

- Media monitoring and sentiment scanning at scale

- Email triage and drafting routine responses

- Meeting transcription, summaries, and action items

- Repurposing content across channels

- Basic editing, proofreading, and consistency checks

- Analytics compilation and draft reporting

What remains human is interpretation. Determining what matters, assessing risk, shaping narrative, advising leadership, and deciding how and when to communicate are still deeply human responsibilities.

The strategic shift isn’t just about automation—it’s about attention. When AI clears the mechanical layer, it creates space for higher-value work. Stakeholder intelligence, timing, political awareness, emotional judgment, and sense-making become the real differentiators.

New Opportunities for Communicators

Some new job titles already exist, largely because organizations need people who know how to use AI well and keep the organization out of trouble. But there are also clear opportunities for communicators to create roles that don't formally exist yet, such as:

- Synthetic Media Response Strategist – owning detection, response, and communication when deepfakes or AI-generated misinformation emerge

- AI Workflow Architect – designing how human and AI work is sequenced for quality and trust

- Executive AI Translator – explaining what AI can and cannot do in clear, non-technical language

- Data and Insight Storyteller – turning AI outputs into meaningful narratives

- Voice and Brand Guardian – protecting tone, authenticity, and differentiation

- Trust and Transparency Lead – shaping ethical use, disclosure norms, and stakeholder trust

The work isn’t disappearing. It’s splitting and specializing, and communicators are well positioned to step into these spaces early.

Building AI Literacy Without Becoming Technical

You don’t need to learn how AI works at a technical level to use it effectively.

Modern AI systems are controlled primarily through language. Clear context, constraints, examples, and iteration are what produce good outputs. That’s not a technical skill. That is communication.

AI literacy comes from use. Experiment regularly. Push tools until they fail. Learn their limits by testing them on topics you already understand well. Develop strong verification instincts and assume anything important needs to be checked.

You don’t need to become technical. You need to become thoughtfully experimental.

What Responsible AI Use Really Means in Communications

Responsible AI use isn’t just about disclosure. It’s about appropriateness.

Audiences don’t lose trust simply because AI was used. They lose trust when AI is used in moments where a human was expected to show up. The real question isn’t “Should we disclose this?” It’s “Should AI be used here at all?”

AI does not belong in moments requiring empathy, judgment, or relational intelligence—crises, sensitive internal communications, or stakeholder conflict. Even with disclosure, those uses can feel like outsourced care.

AI does make sense in drafting, summarizing, sorting, and background work. Responsibility means doing the human work where it matters most and using AI where it doesn’t break trust.

Accuracy, Bias, and Misinformation

General-purpose AI cannot be trusted to verify facts on its own. It predicts language, not truth. Even tools that ground outputs in source documents are not foolproof.

Bias is inherent. These systems reflect the assumptions and gaps in their training data. Reducing risk requires human verification, diverse review, clear prompting, and ongoing attention. There is no one-time fix.

AI increases responsibility; it does not remove it.

The Human Experience

AI is changing how people learn by shifting learning from front-loaded training to just-in-time support. It can personalize learning, adapt to skill gaps, and offer judgment-free practice. Used well, it deepens understanding. Used poorly, it encourages shallow retrieval instead of real learning.

AI can personalize communication at scale, but personalization is not the same as human connection. Personalization is data. Human touch is attention. AI can scale relevance. Only humans can provide presence.

Over-automation risks tone-deaf communication, eroded trust, and the loss of early warning signals that come from real interactions. AI should free communicators to pay closer attention, not step away completely.

Looking Ahead

In the coming years, AI will likely fade into the background as infrastructure, noticed mainly when something goes wrong.

The real shifts communicators should prepare for are practical:

- More synthetic content, increasing the value of verification and credibility

- More automation of routine work, freeing time for judgment and strategy

- Greater emphasis on empathy and emotional intelligence

- Higher stakes around trust and transparency

This isn’t a crisis. It’s a re-centering. Technology speeds up the easy parts so communicators can focus on what has always mattered: clarity, judgment, and care.

Final Thought

This moment isn’t about chasing every new tool. It’s about understanding your role as a sense-maker in a noisy, fast-moving environment. Communicators who thrive will be the ones who stay grounded, think clearly, and keep the work human, especially when the technology doesn’t.

That’s not doom. That’s opportunity.

Explore what AI can do for your internal communications by booking an obligation-free chat with one of our experts!